Explorable Interactive Human Reposing

Interactive reposing

Design

We first let the user upload an image, which we then display back to them.

-

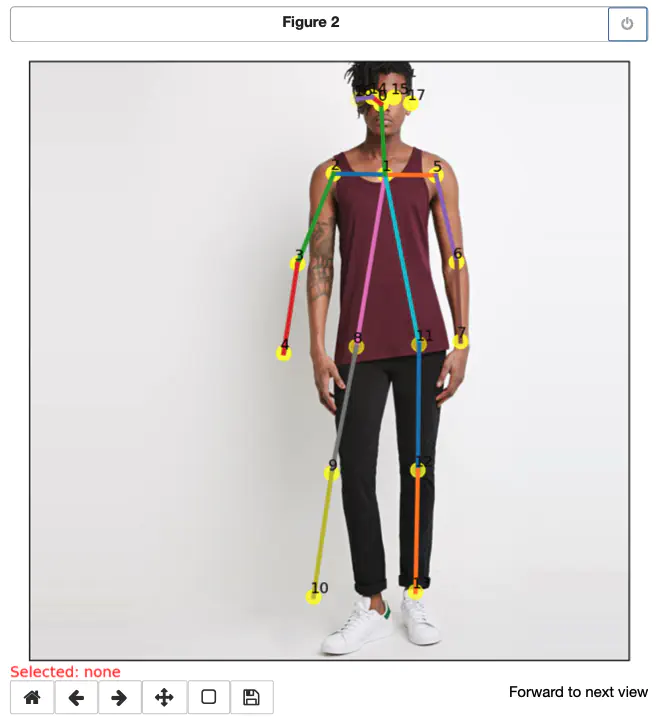

We then extract the pose from the body joint detection algorithm (OpenPose (https://github.com/Hzzone/pytorch-openpose)) and get two arrays (subset and candidate) representing the pose.
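As a minimal sketch of how the two arrays fit together, the function below reads one person's joints out of them. It assumes the layout used by pytorch-openpose's 18-keypoint body model: each `candidate` row is `[x, y, score, id]`, and each `subset` row holds 18 indices into `candidate` (or -1 for an undetected joint), followed by a total score and a count of detected parts. `decode_pose` and `NUM_JOINTS` are hypothetical names introduced for illustration.

```python
import numpy as np

NUM_JOINTS = 18  # assumed: OpenPose 18-keypoint (COCO) body model


def decode_pose(candidate: np.ndarray, subset: np.ndarray, person: int = 0) -> np.ndarray:
    """Return an (18, 2) array of (x, y) joint coordinates for one person.

    Assumed layout (pytorch-openpose):
      candidate: (N, 4) rows of [x, y, score, id]
      subset:    (M, 20) rows; columns 0..17 index into candidate (-1 = missing),
                 column 18 is the total score, column 19 the number of parts.
    Missing joints are returned as NaN.
    """
    keypoints = np.full((NUM_JOINTS, 2), np.nan)
    for joint in range(NUM_JOINTS):
        idx = int(subset[person, joint])
        if idx >= 0:  # -1 marks a joint the detector did not find
            keypoints[joint] = candidate[idx, :2]
    return keypoints
```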

We map these hard-to-read arrays (`subset` and `candidate`) onto a human-readable body-joint skeleton and display it.
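One way to build the displayed skeleton, sketched under the assumption that the pose has already been decoded into an (18, 2) NumPy array with NaN for missing joints: name each joint using OpenPose's 18-keypoint COCO ordering and keep only the bones whose two endpoints were detected. `JOINT_NAMES`, `LIMBS`, and `to_skeleton` are hypothetical; the bone list mirrors the limbs pytorch-openpose draws, written out here by hand with 0-based indices.

```python
import numpy as np

# Joint names in OpenPose's 18-keypoint COCO order (assumed ordering).
JOINT_NAMES = [
    "nose", "neck", "r_shoulder", "r_elbow", "r_wrist",
    "l_shoulder", "l_elbow", "l_wrist", "r_hip", "r_knee",
    "r_ankle", "l_hip", "l_knee", "l_ankle", "r_eye",
    "l_eye", "r_ear", "l_ear",
]

# Bones to draw, as pairs of 0-based joint indices.
LIMBS = [
    (1, 2), (1, 5), (2, 3), (3, 4), (5, 6), (6, 7),
    (1, 8), (8, 9), (9, 10), (1, 11), (11, 12), (12, 13),
    (1, 0), (0, 14), (14, 16), (0, 15), (15, 17),
]


def to_skeleton(keypoints: np.ndarray):
    """Map (18, 2) keypoints (NaN = missing) to named joints and drawable bones."""
    joints = {
        name: (float(x), float(y))
        for name, (x, y) in zip(JOINT_NAMES, keypoints)
        if not (np.isnan(x) or np.isnan(y))
    }
    # Only draw a bone when both of its endpoints were detected.
    bones = [
        (JOINT_NAMES[a], JOINT_NAMES[b])
        for a, b in LIMBS
        if JOINT_NAMES[a] in joints and JOINT_NAMES[b] in joints
    ]
    return joints, bones
```

The returned dictionary and bone list are toolkit-neutral, so any plotting or canvas library can draw circles at the joints and line segments for the bones.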

We make the joints interactive, so that users can drag and drop them to new positions.
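Drag and drop reduces to hit-testing a click against the joints and then overwriting the chosen joint's coordinates. The sketch below is toolkit-agnostic and assumes (18, 2) pixel coordinates with NaN for missing joints; `pick_joint`, `drop_joint`, and the 10-pixel default radius are hypothetical choices, not part of the project.

```python
import numpy as np


def pick_joint(keypoints: np.ndarray, click_xy, radius: float = 10.0):
    """Return the index of the joint nearest the click, or None if none is within radius."""
    dists = np.hypot(keypoints[:, 0] - click_xy[0], keypoints[:, 1] - click_xy[1])
    dists = np.where(np.isnan(dists), np.inf, dists)  # missing joints are never picked
    best = int(np.argmin(dists))
    return best if dists[best] <= radius else None


def drop_joint(keypoints: np.ndarray, joint: int, new_xy) -> np.ndarray:
    """Return a copy of the keypoints with one joint moved to the drop position."""
    edited = keypoints.copy()
    edited[joint] = new_xy
    return edited
```

A UI would typically call `pick_joint` on mouse-down and `drop_joint` on mouse-up; returning a copy leaves the originally detected pose untouched.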

We let the user click “replot” to obtain the final pose they want.

We map the edited pose back into the `subset` and `candidate` arrays, which are passed to the reposing model, CoCosNet-v2 (https://github.com/microsoft/CoCosNet-v2), to synthesize the final reposed image.
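Mapping the edited pose back is the inverse of reading the arrays. The sketch below packs one person's (18, 2) keypoints (NaN = missing) into single-person `candidate` and `subset` arrays, assuming the pytorch-openpose layout (`candidate` rows `[x, y, score, id]`; `subset` rows of 18 indices plus total score and part count). Because dragged joints have no detector confidence, every present joint gets a placeholder score of 1.0; that value, and the name `encode_pose`, are assumptions for illustration.

```python
import numpy as np

NUM_JOINTS = 18  # assumed: OpenPose 18-keypoint (COCO) body model


def encode_pose(keypoints: np.ndarray, score: float = 1.0):
    """Pack (18, 2) keypoints (NaN = missing) into (candidate, subset) arrays.

    `score` is a placeholder confidence for edited joints (an assumption,
    not a value produced by OpenPose).
    """
    rows = []
    subset = -np.ones((1, NUM_JOINTS + 2))  # -1 = joint missing
    for joint in range(NUM_JOINTS):
        x, y = keypoints[joint]
        if np.isnan(x) or np.isnan(y):
            continue  # leave this subset entry at -1
        peak_id = len(rows)
        rows.append([x, y, score, peak_id])
        subset[0, joint] = peak_id
    candidate = np.array(rows, dtype=float).reshape(-1, 4)
    subset[0, -2] = score * len(rows)  # total score
    subset[0, -1] = len(rows)          # number of detected parts
    return candidate, subset
```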

We also let the user supply a second image whose pose they like; the pose extracted from that image is then used as the target pose.
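When the target pose comes from a different image, its coordinates are in that image's pixel frame. One simple assumption is to rescale them proportionally to the source image's size before reposing, as sketched below; `retarget_pose` is a hypothetical helper, and this naive scaling ignores differences in framing and body proportions.

```python
import numpy as np


def retarget_pose(ref_keypoints: np.ndarray, ref_size, src_size) -> np.ndarray:
    """Rescale (x, y) keypoints from the reference image's (width, height)
    to the source image's (width, height). NaN (missing) joints stay NaN."""
    scale = np.array([src_size[0] / ref_size[0], src_size[1] / ref_size[1]])
    return ref_keypoints * scale
```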

We add an “evaluate” button that measures how faithfully the generated image respects the desired pose, using the following metrics:

- Average keypoint distance (AKD): the average distance between the keypoints detected in the output image and the corresponding keypoints of the input pose.

- Missing keypoint rate (MKR): the fraction of input-pose keypoints that are not detected in the generated image.
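A minimal sketch of the two metrics, assuming the keypoints detected in the generated image (for example, by running OpenPose on it again) and the desired pose are both (18, 2) arrays with NaN for missing joints. AKD is averaged only over joints present in both poses, and MKR is reported as a fraction of the desired-pose joints (the raw count is `missing`); `evaluate_pose` is a hypothetical name.

```python
import numpy as np


def evaluate_pose(target: np.ndarray, generated: np.ndarray):
    """Compute (AKD, MKR) between two (18, 2) keypoint arrays (NaN = missing).

    target:    keypoints of the desired (input) pose
    generated: keypoints detected in the synthesized image
    """
    in_target = ~np.isnan(target).any(axis=1)
    in_generated = ~np.isnan(generated).any(axis=1)

    # MKR: share of target keypoints that were not detected in the output.
    n_target = int(in_target.sum())
    missing = int((in_target & ~in_generated).sum())
    mkr = missing / n_target if n_target else 0.0

    # AKD: mean Euclidean distance over keypoints present in both poses.
    both = in_target & in_generated
    if both.any():
        akd = float(np.linalg.norm(target[both] - generated[both], axis=1).mean())
    else:
        akd = float("nan")  # no shared keypoints to compare
    return akd, mkr
```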